Create an Evaluation Plan


Your evaluation plan will help you to:

- Stay organized

- Use financial and staff resources wisely

- Stay accountable along the way

To help you plan, download:

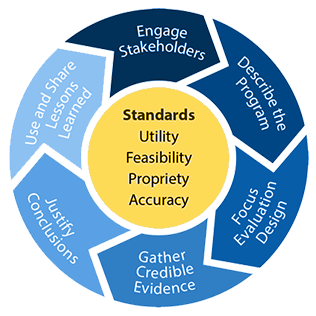

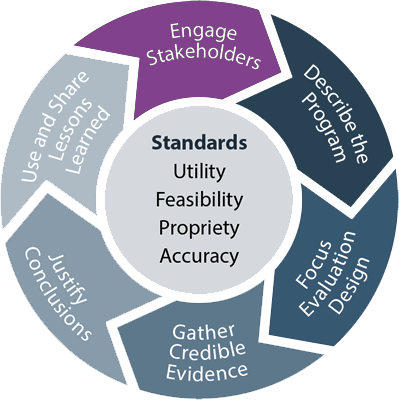

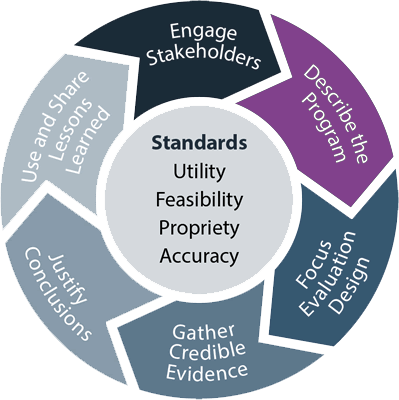

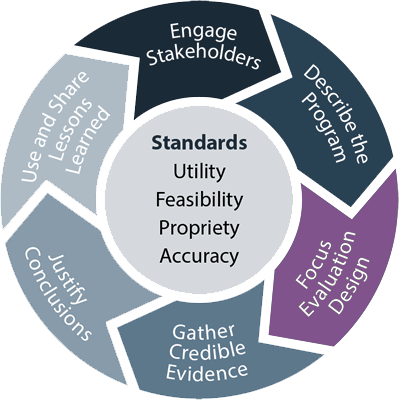

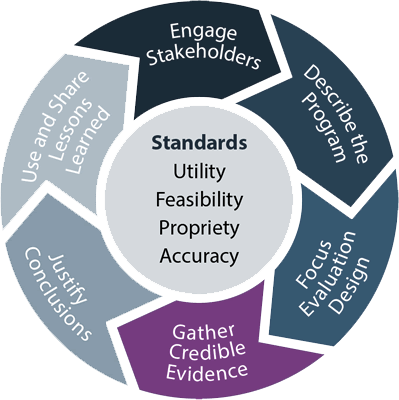

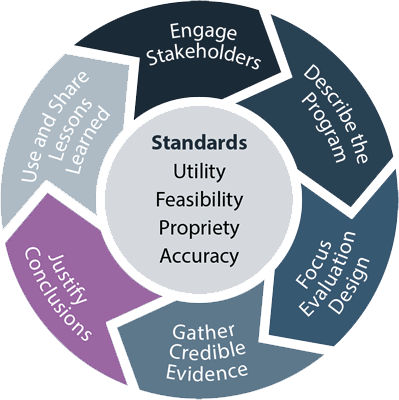

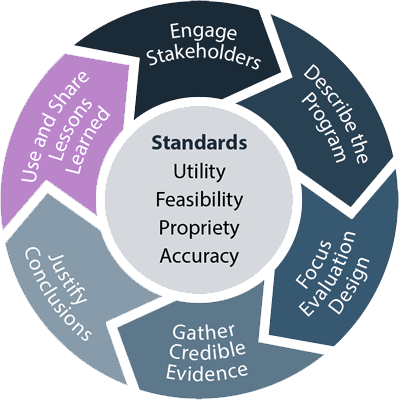

Use the steps below from CDC’s Framework for Program Evaluation to develop your plan.

Start with the people involved in the planning phase.

- Engage a diverse set of stakeholders in all steps of the evaluation process.


Logic models can help describe your program's approaches by identifying their inputs, activities, outputs, and outcomes.

- For more information about building your logic model, visit evaluACTION.


Determine the most important evaluation questions and select the most appropriate methods for answering them.

Choose indicators that address evaluation questions in a meaningful way. Don’t forget to describe your information sources. Consider multiple data sources for gathering, analyzing, and interpreting data.

- Gather the best possible data by using culturally appropriate tools and methodologies. These tools and methodologies should account for factors such as the population’s language needs, literacy levels, and facilitator preferences. Choose relevant variables so that sufficient data are gathered, tracked, and analyzed.

Analyze and interpret the evidence gathered to develop recommendations.

Create a dissemination plan that ensures that the evaluation processes and findings are shared and used appropriately. Communicate your results to relevant audiences in a timely, unbiased, and consistent manner.


Apply Evaluation Standards

Applying evaluation standards helps ensure that evaluations are sound and fair. The best evaluations are designed to be:

- Useful and serve the information needs of various audiences

- Feasible, realistic, cost-effective, and practical

- Conducted legally and ethically, with regard for the welfare of the affected people, groups, and communities

- Accurate, so that they reveal and convey correct, truthful information

For more information on the Framework, visit the CDC Program Performance and Evaluation Office’s website: https://www.cdc.gov/eval/framework/index.htm

Evaluation: Keep Your Effort on Track

Select Indicators and Identify Data Sources

To track changes in desired outcomes, select specific indicators and define them in your evaluation plan. Indicators are measurable pieces of information used to determine whether a program, practice, or policy is being implemented as expected and is achieving desired outcomes.

- Process indicators can help define activity statements, such as “good coalition” or “quality culturally competent training,” in measurable terms.

- Outcome indicators can help you measure changes in desired outcomes. They also guide the selection of data collection methods and the content of data collection instruments.


Once you have determined the indicators you will use to measure progress, you need to select data sources. A key decision is whether there are existing data sources, such as publicly available data, for tracking your indicators or whether you need to collect new data.

Data collection methods also fall into several broad categories. Among the most common are:

- Surveys

- Group discussions or focus groups

- Individual interviews

- Observation

- Document review, such as implementation logs, meeting minutes, and agency records

Many national, state, and local sources of publicly available data may help with planning and evaluation. The data sources available to you depend in part on the jurisdiction and the level of data you seek. Reach out to epidemiologists in your community to identify data sources and examine data quality and utility.

Process and Outcome Evaluation

You can conduct different types of evaluations depending on the questions you are trying to answer, how long the program has been in existence, who is asking the question, and how you will use the information. Process evaluation can inform outcome evaluation and vice versa. For example, if an outcome evaluation shows that your strategies and approaches did not produce the expected results, the cause may be implementation issues that a process evaluation can help uncover.

Process evaluation, also called implementation evaluation, covers the what, how, and who of the approaches in your violence prevention plan. It can document whether the essential elements of your approaches are carried out as intended and help you describe the context and measures such as reach and dose. Process evaluation helps distinguish the causes of successful or poor performance, which in turn can help you improve implementation.

See how New Jersey conducted its process evaluation.

Outcome evaluation determines whether your strategies and approaches are achieving the desired results. Here are some potential outcomes:

- Trends in morbidity and mortality, and risk and protective factors related to violence

- Changes to the environment

- Changes in social norms

- Access to resources

- Behaviors, attitudes, and beliefs

See how Kansas conducted its outcome evaluation.

For more information about evaluating injury prevention policies, review NCIPC’s Policy Evaluation Briefs, found in the Resource Center.